Vox Augmento

VR · Music · Unity · Max/MSP

Vox Augmento uses VR technology to open up new forms of creative musical expression. It lets you build your own musical interface in real time with a three-dimensional paintbrush and the ultimate synthesizer: your voice. As you paint in space, audio is recorded and mapped to the brushstroke. Winner of Best Technical Achievement at the 2017 Seattle VR Hackathon.

VR Music Innovation

Created a unique musical instrument that could not be realized outside VR, enabling natural movement-based musical expression.

3D Sound Painting

Designed an intuitive interface where users paint sounds in 3D space using voice and controller movements, with real-time audio modulation.

Hackathon Winner

Awarded Best Technical Achievement at the 2017 Seattle VR Hackathon for its innovative integration of Unity and Max/MSP.

Accessible Design

Built for both novice and experienced musicians, with a low barrier to entry and a high ceiling for expressive mastery.

Problem

Conventional music software relies heavily on skeuomorphism, replicating real-world instruments in digital space. VR offers a new medium for creative expression, yet most VR music apps still follow the same conventions. The challenge was to create a musical instrument that could not exist outside VR, one that uses the unique capabilities of immersive 3D space for natural, movement-based musical expression.

Solution

I designed and developed Vox Augmento as a VR-centric music tool that makes fluid bodily expression inseparable from musical expression. Users paint the sounds they make into the VR environment with their voice and controller movements. The shape of each painting alters and modulates its sound, and users can interact with painted sounds to replay them. This approach unlocks latent musical abilities in new users while extending the capabilities of experienced musicians.

Impact

- 🏆 Best Technical Achievement

- 48hr development time

- 100% VR native

- 3D spatial audio

Technology

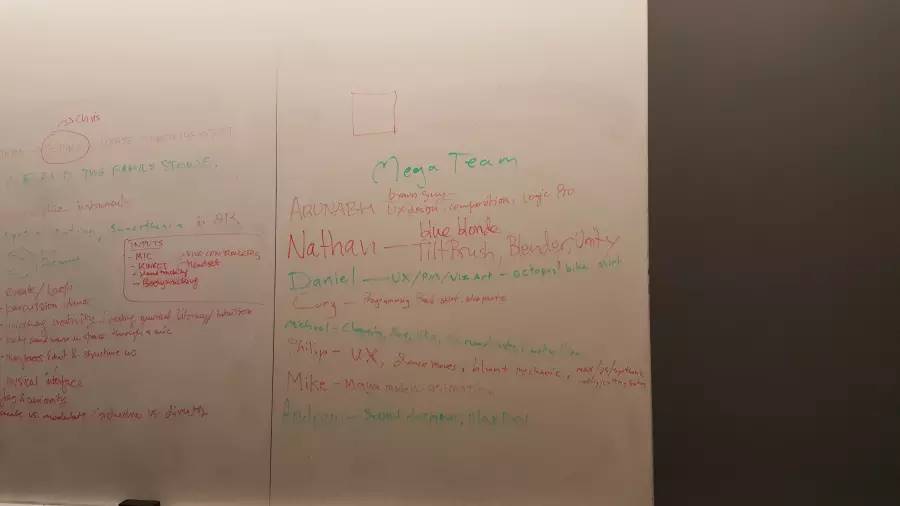

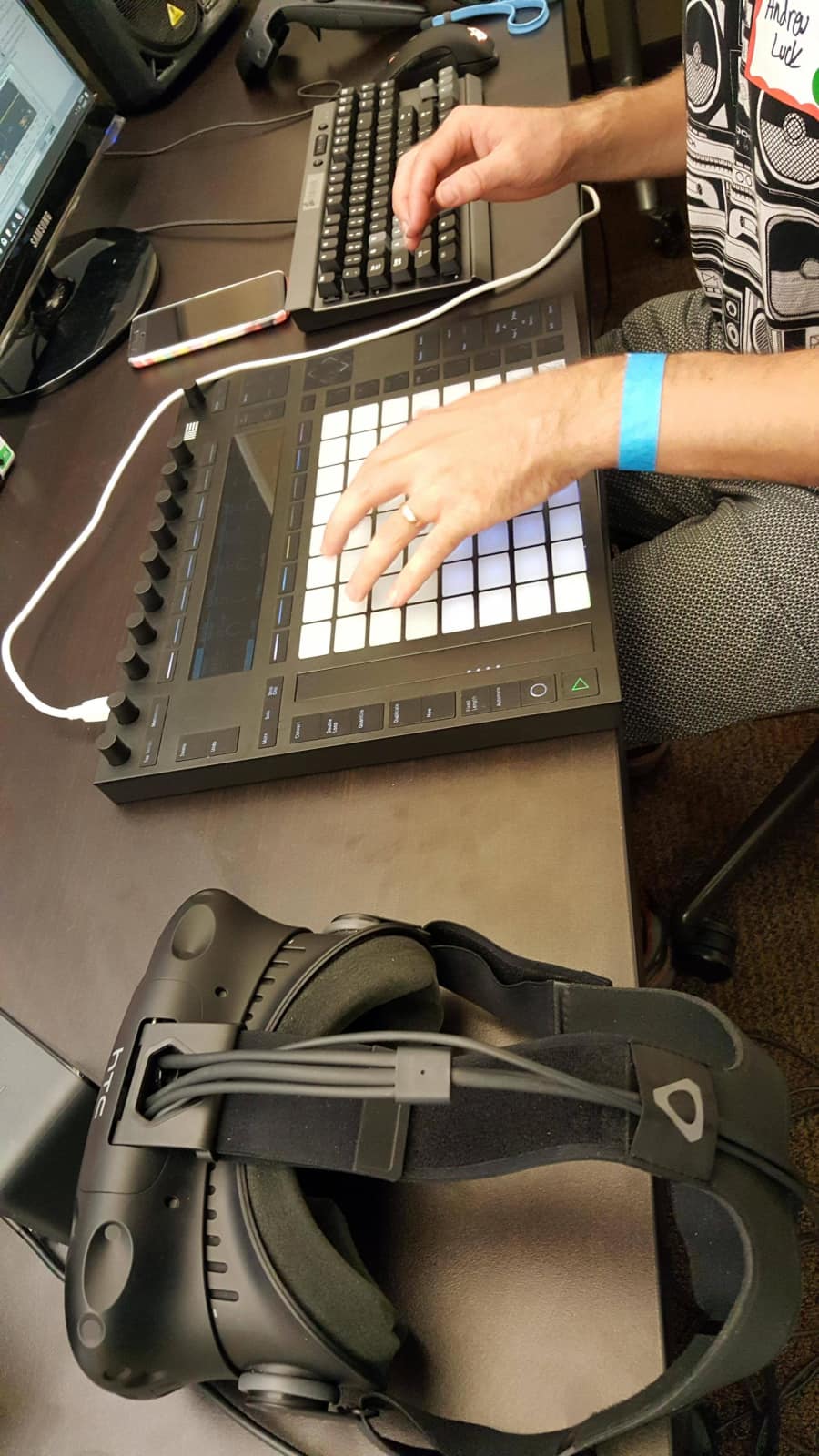

We selected technologies that enabled real-time audio processing and immersive VR experiences: Unity for the VR environment and Max/MSP as the real-time audio engine.

Design Philosophy

The design was guided by several key principles:

- Sonic Interaction: Making bodily expression inseparable from musical expression

- Visual Understanding: Users can revisit their actions, because everything they create persists as a visible object in VR space

- Experimentation: Minimizing menus and modes to encourage free exploration and discovery

- Mastery: Low barrier to entry with high expressive potential for those seeking mastery

Product

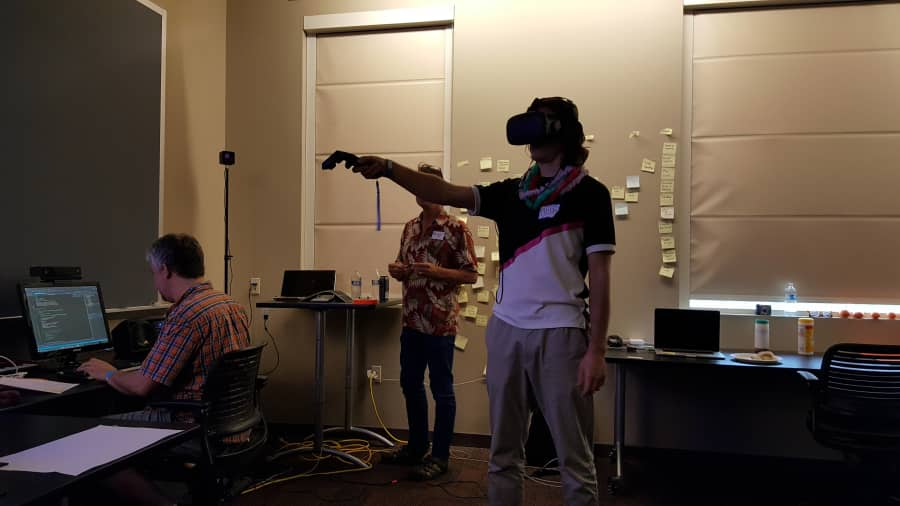

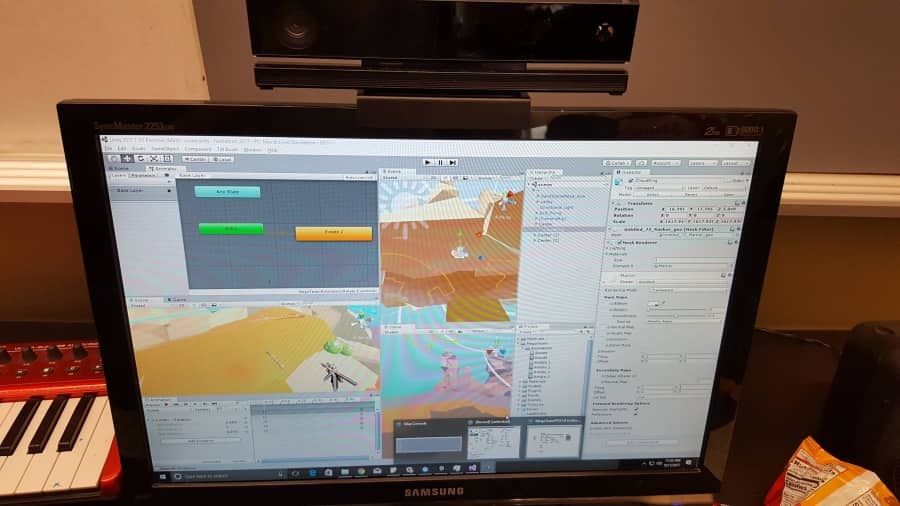

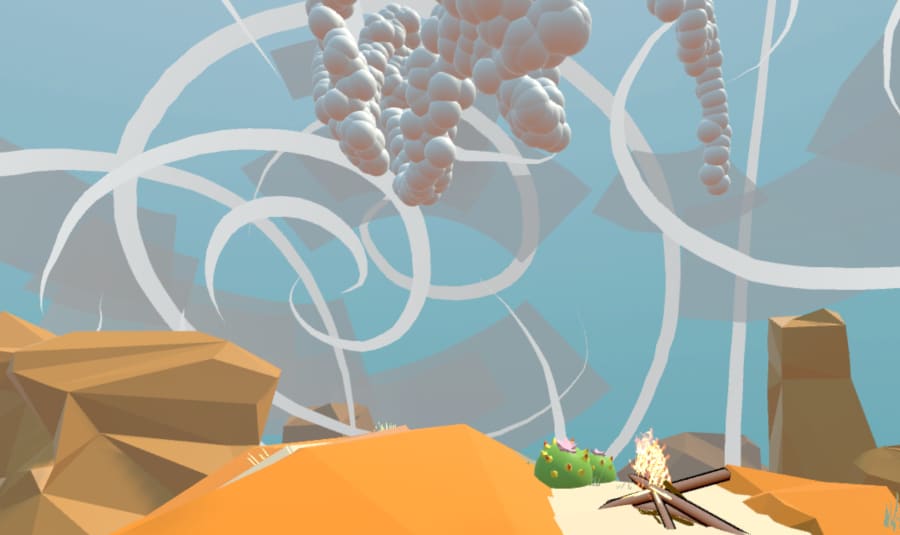

The MVP let users paint what they say as if placing a waveform in the air. Raising the controller raises the pitch; lowering it lowers the pitch. Colliding with a painted waveform replays its sound. The visual setting places users on a rocky landscape platform, and the controller appears as a bone wand evoking an ancient paintbrush.
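The height-to-pitch rule can be sketched as a simple mapping. This is a minimal Python sketch, not the project's Unity/Max/MSP code. It assumes height is measured in meters, that pitch shifts linearly in semitones relative to a hypothetical reference height, and that the shift is applied as a playback-rate multiplier of 2^(s/12). All constant names and values are illustrative.

```python
# Hypothetical tuning constants; the original project's values are not documented.
REFERENCE_HEIGHT_M = 1.2    # controller height (meters) that leaves pitch unchanged
SEMITONES_PER_METER = 12.0  # one octave of shift per meter of vertical travel
MAX_SHIFT_SEMITONES = 24.0  # clamp the shift to +/- two octaves


def height_to_semitones(height_m: float) -> float:
    """Map controller height to a pitch offset: higher controller, higher pitch."""
    shift = (height_m - REFERENCE_HEIGHT_M) * SEMITONES_PER_METER
    return max(-MAX_SHIFT_SEMITONES, min(MAX_SHIFT_SEMITONES, shift))


def semitones_to_rate(semitones: float) -> float:
    """Convert a semitone offset to a playback-rate multiplier (12 semitones doubles it)."""
    return 2.0 ** (semitones / 12.0)


if __name__ == "__main__":
    for height in (0.2, 1.2, 2.2, 4.0):
        st = height_to_semitones(height)
        print(f"height {height:.1f} m -> {st:+.1f} semitones, rate x{semitones_to_rate(st):.3f}")
```

Resampling by a rate multiplier is the simplest way to shift pitch, but it also shortens or lengthens the clip. A duration-preserving pitch shifter would avoid that at the cost of more processing.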

Real-time Audio Processing

Integrated Unity with Max/MSP for real-time audio modulation and effects, enabling immediate feedback as users paint sounds in 3D space.
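The write-up does not say how Unity and Max/MSP were connected. One common approach is Open Sound Control (OSC) over UDP, which Max's `udpreceive` object can receive. The sketch below, written in Python for illustration rather than Unity's C#, hand-encodes an OSC 1.0 message with float arguments. The address `/vox/controller`, the host, and port 7400 are hypothetical.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII bytes, at least one null terminator, padded to 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_message(address: str, *args: float) -> bytes:
    """Encode an OSC 1.0 message whose arguments are all big-endian float32 ('f')."""
    type_tags = "," + "f" * len(args)
    payload = b"".join(struct.pack(">f", float(a)) for a in args)
    return osc_string(address) + osc_string(type_tags) + payload


def send_controller_state(sock: socket.socket, host: str, port: int,
                          position: tuple[float, float, float], trigger: float) -> None:
    """Send controller position and trigger value as one OSC message (hypothetical address)."""
    x, y, z = position
    sock.sendto(encode_osc_message("/vox/controller", x, y, z, trigger), (host, port))


if __name__ == "__main__":
    # Hypothetical endpoint: a Max patch listening with [udpreceive 7400].
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        send_controller_state(sock, "127.0.0.1", 7400, (0.1, 1.5, -0.3), 1.0)
```

Sending one small datagram per frame keeps latency low. UDP can drop packets, which is usually acceptable for continuously updated controller state but less so for one-off events such as a collision.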

Intuitive Interaction

The controller trigger records spoken sounds and paints them as bubbles in the VR world. Movement during recording shapes how the sound is modulated on playback, creating unique sonic textures.
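A painted stroke can be modeled as the recorded clip plus the controller path sampled while recording. The minimal sketch below assumes Unity's convention that y is up. It stores timestamped positions, interpolates height at any playback time (a value a pitch mapping could consume), and treats a touch within a hypothetical 5 cm radius of any sampled bubble as a collision that should replay the clip. `clip_id` and the radius are illustrative, not taken from the project.

```python
import math
from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass
class Stroke:
    """One painted sound: the controller path captured while recording."""
    clip_id: int                                        # hypothetical handle to the audio clip
    times: list[float] = field(default_factory=list)   # seconds since recording started
    points: list[Vec3] = field(default_factory=list)   # controller positions in meters (y is up)

    def add_sample(self, t: float, position: Vec3) -> None:
        """Append one controller sample taken during recording."""
        self.times.append(t)
        self.points.append(position)

    def height_at(self, t: float) -> float:
        """Controller height at playback time t, linearly interpolated along the path."""
        if not self.times:
            raise ValueError("empty stroke")
        if t <= self.times[0]:
            return self.points[0][1]
        for i in range(1, len(self.times)):
            if t <= self.times[i]:
                t0, t1 = self.times[i - 1], self.times[i]
                y0, y1 = self.points[i - 1][1], self.points[i][1]
                frac = (t - t0) / (t1 - t0) if t1 > t0 else 1.0
                return y0 + frac * (y1 - y0)
        return self.points[-1][1]  # past the end: hold the last height

    def hit_by(self, position: Vec3, radius: float = 0.05) -> bool:
        """True if position lies within `radius` meters of any sampled bubble."""
        return any(math.dist(position, p) <= radius for p in self.points)
```

Testing against sampled points matches the bubble rendering and stays cheap for short strokes. Measuring distance to the segments between points would also catch touches that slip between widely spaced bubbles.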

Discoverable Mechanics

The instrument makes its limits and functions easy to discover, encouraging novices and experts alike to create through free experimentation.

Development

Development followed a three-step approach:

- Establish a real-time link between Unity and Max/MSP to get controller and collision data into the audio engine.

- Develop in parallel to produce working assets and interactions, including recording, modulation, and controller movement mapping.

- Polish the experience and add final touches for the hackathon demo.

Successes

The project achieved recognition and demonstrated the potential of VR for musical expression:

- Won Best Technical Achievement at the 2017 Seattle VR Hackathon

- Demonstrated that VR can unlock latent musical abilities in new users

- Created a novel mode of expression that extends the capabilities of experienced musicians

- Showed that musical instruments can be designed specifically for VR, not just ported from traditional interfaces

Demo

Users were handed a single controller with little instruction beyond the app's intent. The goal was discovery: we could not fully predict how people would use the instrument, and the demo surfaced new uses and practices we had not initially envisioned.

Conclusion

Vox Augmento demonstrated that VR technology can create entirely new forms of musical expression that are impossible in traditional interfaces. By making bodily movement inseparable from sound creation, we created an instrument that is both accessible to novices and expressive enough for masters. This project exemplifies how emerging technologies can unlock new creative possibilities when we move beyond skeuomorphic design patterns.